Fog Computing

Latest revision as of 11:44, 3 June 2020

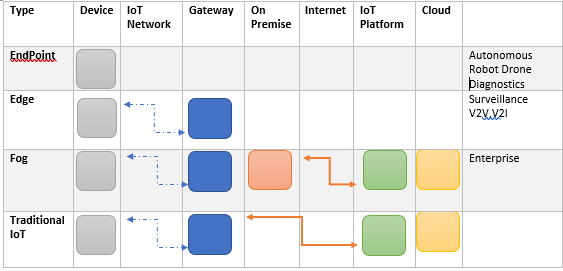

The term fog computing was coined by Cisco and envisages multiple compute points in an IoT network. Instead of the cloud being the sole compute engine, gateways and other network infrastructure may have the resources (being full-fledged Linux devices) to preprocess data before sending it to back-end applications, or sometimes even to process it locally. Fog differs from older distributed computing in that many compute engines of different capacities (multiple gateways, on-premises servers and the cloud) collaborate in near real time. A common usage is in network surveillance and malware detection.
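A minimal sketch of the gateway-side preprocessing described above, written in Python for illustration only. It assumes the gateway receives a batch of raw temperature readings and forwards one summary record plus any out-of-range readings, instead of every sample. The threshold value and the `preprocess_batch` and `send_upstream` names are hypothetical; `send_upstream` just prints, standing in for whatever uplink (MQTT, HTTPS, etc.) a real deployment would use.

```python
from statistics import mean

# Hypothetical alert threshold, chosen for illustration only: readings above
# it are forwarded individually instead of only appearing in the summary.
ALERT_THRESHOLD_C = 80.0


def preprocess_batch(readings, threshold=ALERT_THRESHOLD_C):
    """Reduce a batch of raw readings to one summary record plus alerts."""
    if not readings:
        return None, []
    summary = {
        "count": len(readings),
        "min": min(readings),
        "max": max(readings),
        "mean": round(mean(readings), 2),
    }
    # Only readings that need immediate attention are sent individually.
    alerts = [r for r in readings if r > threshold]
    return summary, alerts


def send_upstream(payload):
    # Placeholder for the uplink to the back-end application (hypothetical).
    print("upstream:", payload)


if __name__ == "__main__":
    summary, alerts = preprocess_batch([21.4, 21.9, 22.1, 85.3, 22.0])
    send_upstream({"summary": summary, "alerts": alerts})
```

With the sample batch, only the 85.3 reading is forwarded individually; the other four are represented only by the summary, which is what reduces traffic to the back end.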

Edge computing, by contrast, implies a single local compute point (the endpoint), which is connected but does not use cloud or on-premises resources when responding to an event. In AI terms, this corresponds to local inference on the device.

Fog vs Edge

Many use the terms fog computing and edge computing (or edge processing) interchangeably. However, there is a subtle but important difference, and it matters more as special-purpose chips running AI/ML at the edge come into use.

| Quote | Source |
|---|---|
| “The key difference between the two architectures is exactly where that intelligence and computing power is placed,” he said. According to Newton: Fog computing pushes intelligence down to the local area network level of network architecture, processing data in a fog node or IoT gateway. Edge computing pushes the intelligence, processing power and communication capabilities of an edge gateway or appliance directly into devices like programmable automation controllers (PACs). | What is edge computing and how it’s changing the network |

Edge computing AI/ML

We distinguish between cloud-based services, on-premises services, fog and edge. In our definition, IoT gateways, which are powerful, full-function Linux devices, are used in a fog architecture, and core functionality can be delivered from the gateway. Edge computing is when the processing happens on the endpoint itself, which may be a limited-function device with little compute capacity (even an MCU rather than a Raspberry Pi) and little memory, and which may run on battery. The work done by the device can be limited, as in an RFID reader, or somewhat more functional, such as limited vibration recognition for industrial devices. It may also involve a more significant process, such as running inference with a miniature or lite AI/ML model.

- See Architecture for the components of the IoT Stack
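The kind of limited, local inference described for edge endpoints can be sketched as follows. This assumes an endpoint that samples an accelerometer in fixed windows and runs a tiny logistic-regression model over two features (RMS and peak amplitude). The weights, bias and feature choice are made up for illustration; on an actual MCU this would more likely be written in C or run through a TinyML runtime than in desktop Python. The point shown is only that the decision is made on the device, with no call to cloud or on-premises services.

```python
import math

# Hypothetical model parameters. In practice they would be trained offline
# (e.g. in the cloud) and then deployed to the device; these are made up.
WEIGHT_RMS = 4.0
WEIGHT_PEAK = 1.5
BIAS = -6.0


def rms(window):
    """Root-mean-square amplitude of one window of accelerometer samples."""
    if not window:
        raise ValueError("empty window")
    return math.sqrt(sum(x * x for x in window) / len(window))


def _sigmoid(z):
    # Numerically stable logistic function (avoids overflow in math.exp).
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def fault_probability(window):
    """Tiny logistic-regression 'model' over two hand-picked features."""
    rms_value = rms(window)
    peak_value = max(abs(x) for x in window)
    return _sigmoid(WEIGHT_RMS * rms_value + WEIGHT_PEAK * peak_value + BIAS)


def on_window(window, threshold=0.5):
    """Classify one window locally; nothing is sent off the device."""
    return "ALERT" if fault_probability(window) >= threshold else "OK"
```

With these made-up weights, a quiet window such as `[0.1, -0.1, 0.05, -0.05]` yields `OK`, while `[1.5, -1.6, 1.4, -1.5]` crosses the threshold and yields `ALERT`.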

| Quote | Source |
|---|---|
| "Fake" edge solutions claim they can process data at the edge, but really rely on sending data back to the cloud for batch or micro-batch processing. When one reads about edge computing, the fakes are recognized as those without a complex event processor (CEP), which means latency is higher and the data remains "dirty," making analytics much less accurate and ML models significantly compromised. "Real" edge intelligence starts with a hyper-efficient CEP that cleanses, normalizes, filters, contextualizes and aligns "dirty" or raw streaming industrial data as it's produced. In addition, a "real" edge solution includes integrated ML and AI capabilities, all embedded into the smallest (and largest) computing footprints. | Edge Computing and the Industrial Internet of Things in 2019 |
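The quoted description of a complex event processor (CEP) can be illustrated with a small stream-processing sketch. It assumes JSON-like records with hypothetical `sensor`, `value`, `unit` and `seq` fields, a made-up sensor-to-asset context table, and a single rule: raise an `OVERHEAT` event when three consecutive normalized readings for one asset exceed 90 °C. It covers cleansing, normalizing, filtering and contextualizing; alignment across streams is left out, and a production CEP engine would offer a far richer rule language.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class Event:
    asset_id: str
    temp_c: float
    seq: int


# Hypothetical context table mapping raw sensor IDs to plant assets.
SENSOR_TO_ASSET = {"s1": "pump-07", "s2": "pump-08"}


def cleanse(raw):
    """Parse a raw record; return None if it is malformed."""
    try:
        return raw["sensor"], float(raw["value"]), raw["unit"], int(raw["seq"])
    except (KeyError, TypeError, ValueError):
        return None


def normalize(value, unit):
    """Convert a reading to degrees Celsius (only 'F' and 'C' are assumed)."""
    return (value - 32.0) * 5.0 / 9.0 if unit == "F" else value


class OverheatDetector:
    """Emit a complex event when `run_length` consecutive readings for the
    same asset exceed `limit_c` (a simple sliding-window CEP rule)."""

    def __init__(self, limit_c=90.0, run_length=3):
        self.limit_c = limit_c
        self.run_length = run_length
        self.windows = {}  # asset id -> deque of recent Events

    def process(self, raw):
        parsed = cleanse(raw)
        if parsed is None:
            return None  # filter: malformed record
        sensor, value, unit, seq = parsed
        asset = SENSOR_TO_ASSET.get(sensor)
        if asset is None:
            return None  # filter: no context known for this sensor
        temp_c = normalize(value, unit)
        if not -50.0 <= temp_c <= 250.0:
            return None  # filter: physically implausible reading
        window = self.windows.setdefault(asset, deque(maxlen=self.run_length))
        window.append(Event(asset, temp_c, seq))
        if len(window) == self.run_length and all(
            e.temp_c > self.limit_c for e in window
        ):
            window.clear()  # reset so the same run is not reported twice
            return {"event": "OVERHEAT", "asset": asset, "last_seq": seq}
        return None
```

Feeding the detector 95 °C, a malformed value, 203 °F (95 °C) and 96 °C for sensor `s1` produces an `OVERHEAT` event on the fourth record; the malformed record is simply dropped.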

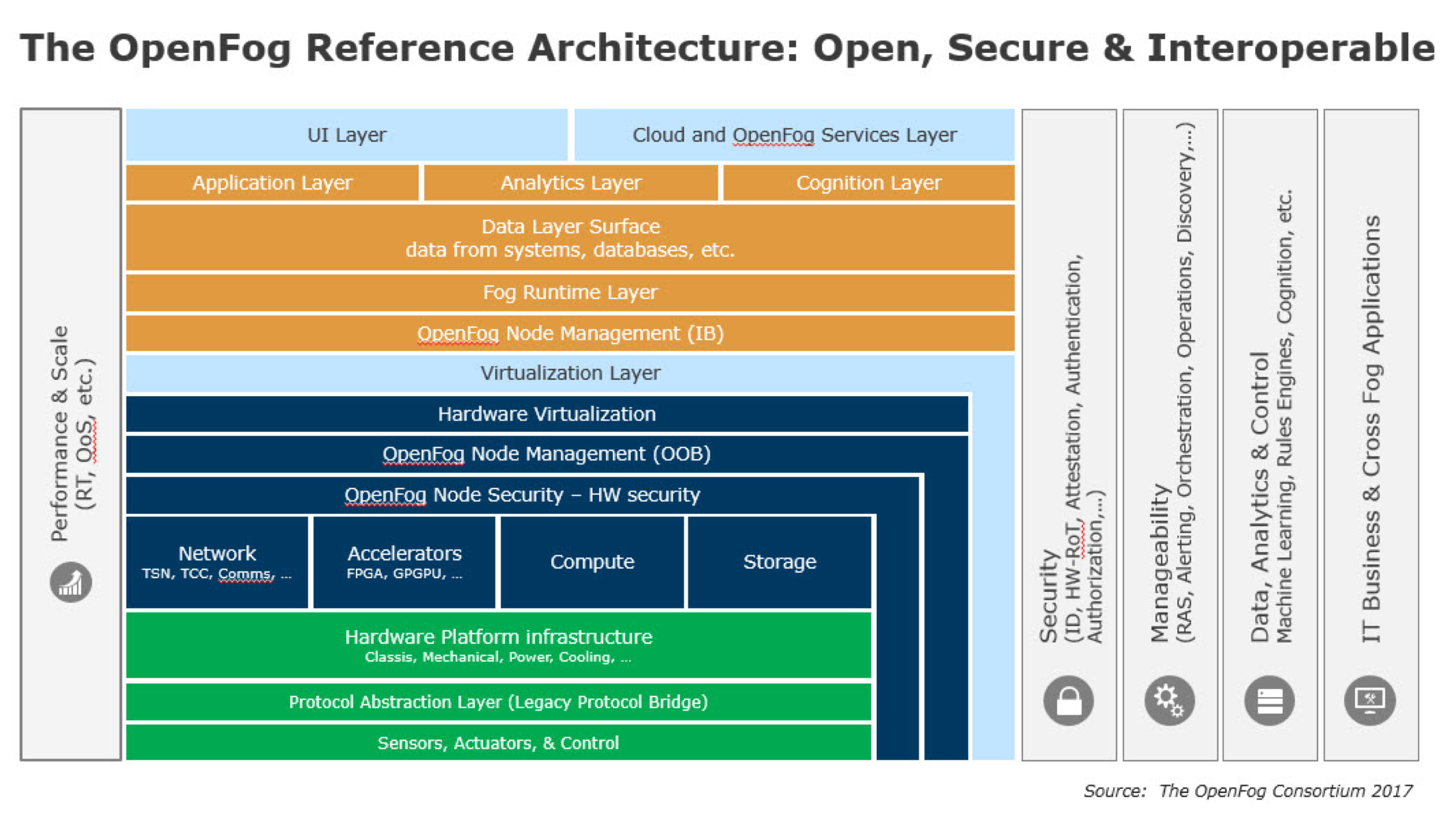

OpenFog Consortium

- OpenFog Consortium

Fog computing is a system-level horizontal architecture that distributes resources and services of computing, storage, control and networking anywhere along the continuum from Cloud to Things. It is a:

- Horizontal architecture: Support multiple industry verticals and application domains, delivering intelligence and services to users and business

- Cloud-to-Thing continuum of services: Enable services and applications to be distributed closer to Things, and anywhere along the continuum between Cloud and Things

- System-level: Extend from the Things, over the network edges, through the Cloud, and across multiple protocol layers – not just radio systems, not just a specific protocol layer, not just at one part of an end-to-end system, but a system spanning between the Things and the Cloud

- The Industrial Internet Consortium has taken over from the OpenFog Consortium and has a reference document: Introduction to Edge Computing in IIoT
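One way to picture distributing work "anywhere along the continuum from Cloud to Things" is a simple placement rule: run a task on the nearest tier that has enough capacity and still meets the task's latency budget. The tiers, latency figures and abstract compute units below are illustrative assumptions, not values from the OpenFog or IIC documents, and the `place` function is hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tier:
    name: str
    round_trip_ms: float  # assumed typical latency from the Thing to this tier
    compute_units: int    # abstract capacity, illustrative only


# Hypothetical tiers along the Cloud-to-Thing continuum, nearest first.
CONTINUUM = [
    Tier("thing", 0.0, 1),
    Tier("fog-gateway", 5.0, 20),
    Tier("on-premises", 20.0, 200),
    Tier("cloud", 120.0, 10_000),
]


def place(task_compute, latency_budget_ms, tiers=CONTINUUM):
    """Return the nearest tier with enough capacity within the latency
    budget, or None if no tier satisfies both constraints."""
    for tier in tiers:  # tiers are ordered nearest to farthest
        if (tier.compute_units >= task_compute
                and tier.round_trip_ms <= latency_budget_ms):
            return tier
    return None
```

For example, `place(10, 10)` returns the `fog-gateway` tier, while a task needing 500 units within 50 ms returns `None` because no tier meets both constraints. Preferring the nearest qualifying tier is one design choice among many; it keeps data close to the Things when possible.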

See Also

- IoT Architecture

- The Fog computing paradigm: Scenarios and security issues

- Fog Computing vs. Edge Computing: What’s the Difference?

- Why IoT needs Fog Computing

- 7 Examples of Edge Computing

- Technical capabilities of an edge computing solution: Bosch