Google is making a fast specialized TPU chip for edge devices

Tagged: AIAnalytics_H13, FPGA_H3

- This topic has 0 replies, 1 voice, and was last updated 6 years, 4 months ago by

Curator Topics.


July 27, 2018 at 10:25 am #23320

At Google Cloud Next 2018 in San Francisco, Google announced an edge device:

“

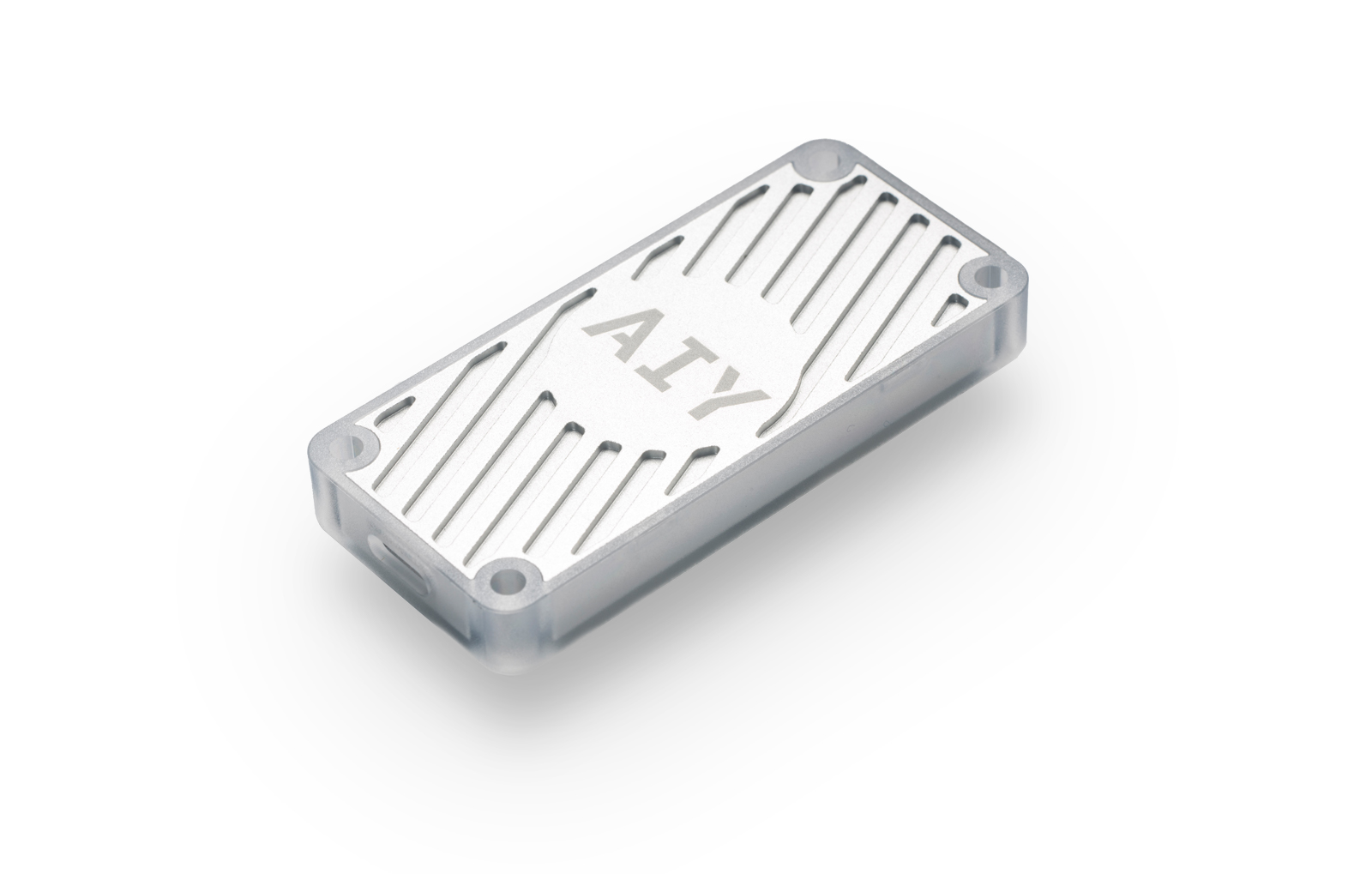

Today, we’re announcing two new products aimed at helping customers develop and deploy intelligent connected devices at scale: Edge TPU, a new hardware chip, and Cloud IoT Edge, a software stack that extends Google Cloud’s powerful AI capability to gateways and connected devices. This lets you build and train ML models in the cloud, then run those models on the Cloud IoT Edge device through the power of the Edge TPU hardware accelerator.

The Edge TPU is a small ASIC designed by Google that provides high performance ML inferencing for low-power devices. For example, it can concurrently execute multiple state-of-the-art vision models on high-res video at 30+ fps, in a power efficient manner.

With one of the following Edge TPU devices, you can build embedded systems with on-device AI features that are fast, secure, and power efficient. The USB Accelerator is a small stick that includes a USB Type-C socket, which you can connect to any Linux-based system to perform accelerated ML inferencing. The casing includes mounting holes for attachment to host boards such as a Raspberry Pi Zero or your custom device.”
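To put the "30+ fps" figure in perspective, here is a rough sketch of the per-frame time budget it implies. It assumes, purely for illustration, that several models share the accelerator by running one after another on every frame; the announcement does not say how concurrency is actually scheduled, and the function names are hypothetical.

```python
def frame_budget_ms(fps: float) -> float:
    """Time available to process one frame at the given frame rate."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


def per_model_budget_ms(fps: float, num_models: int) -> float:
    """Per-inference budget if num_models each run once on every frame.

    Assumption (not from the announcement): simple time-slicing with
    no overlap and no scheduling overhead.
    """
    if num_models < 1:
        raise ValueError("num_models must be at least 1")
    return frame_budget_ms(fps) / num_models


if __name__ == "__main__":
    for n in (1, 2, 4):
        budget = per_model_budget_ms(30, n)
        print(f"{n} model(s) at 30 fps: {budget:.1f} ms per inference")
```

At 30 fps a frame arrives roughly every 33 ms, so under this simplified model every additional vision model shrinks the time each inference can take.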

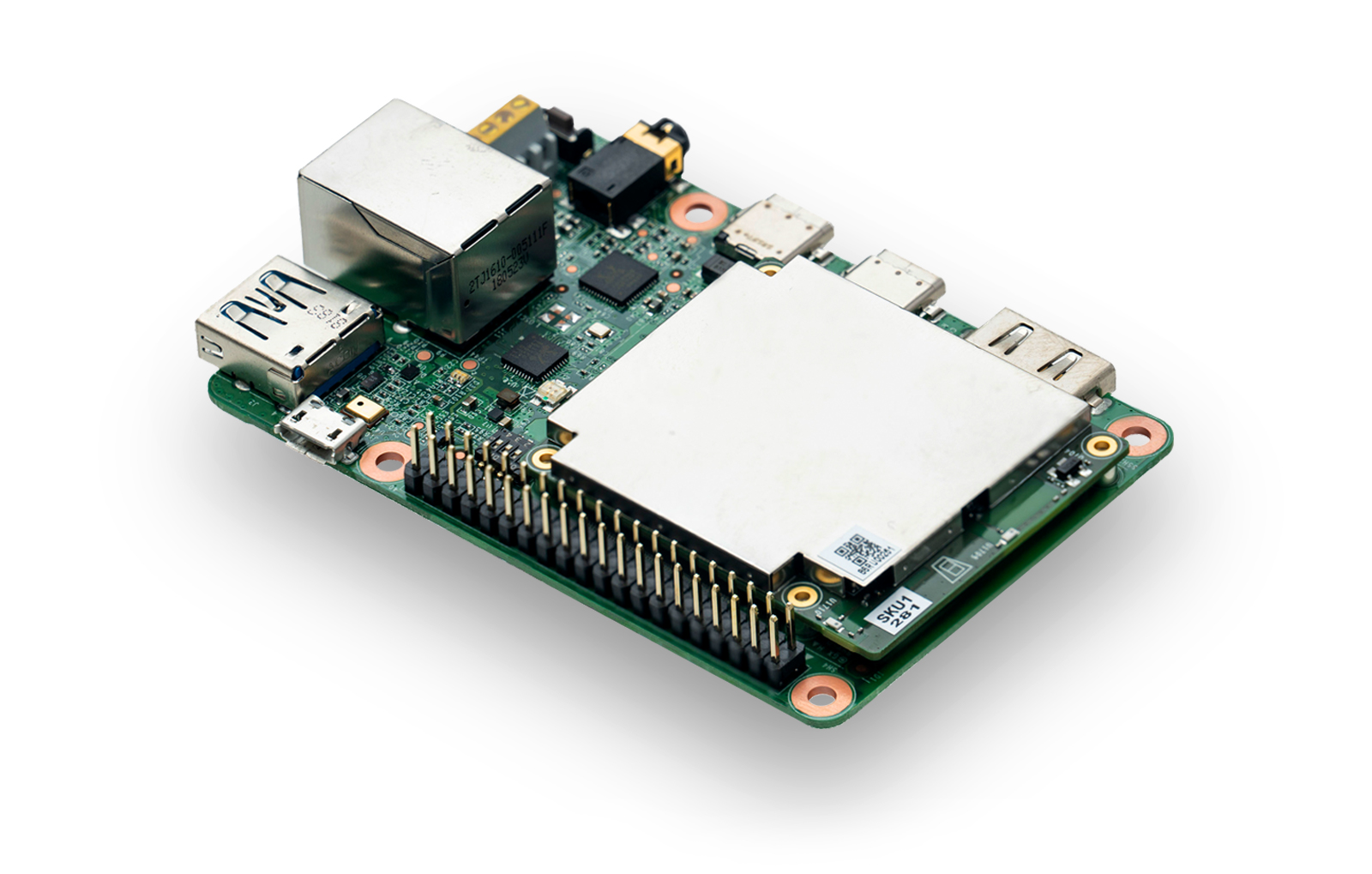

EDGE TPU MODULE (SOM) SPECIFICATIONS

| Component | Specification |
|---|---|
| CPU | NXP i.MX 8M SoC (quad Cortex-A53, Cortex-M4F) |
| GPU | Integrated GC7000 Lite Graphics |
| ML accelerator | Google Edge TPU coprocessor |
| RAM | 1 GB LPDDR4 |
| Wireless | Wi-Fi 2×2 MIMO (802.11b/g/n/ac 2.4/5GHz), Bluetooth 4.1 |
| Dimensions | 40 mm x 48 mm |

TechCrunch describes this as follows:

“Google is exploiting an opportunity to split the process of inference and machine training into two different sets of hardware and dramatically reduce the footprint required in a device that’s actually capturing the data. That would result in faster processing, less power consumption and, potentially more importantly, a dramatically smaller surface area for the actual chip.

Google also is rolling out a new set of services to compile TensorFlow (Google’s machine learning development framework) into a lighter-weight version that can run on edge devices without having to call the server for those operations. That, again, reduces the latency and could have any number of results, from safety (in autonomous vehicles) to just a better user experience (voice recognition).

As competition heats up in the chip space, both from the larger companies and from the emerging class of startups, nailing these use cases is going to be really important for larger companies. That’s especially true for Google as well, which also wants to own the actual development framework in a world where there are multiple options like Caffe2 and PyTorch.”
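The split between training and inference can be illustrated with a toy sketch in plain Python. This is not the Edge TPU or TensorFlow workflow: the model (a one-variable linear fit), the JSON export format, and all function names are assumptions made up for illustration. The point is only that the expensive iterative step runs in one place, while the device needs nothing but the exported parameters and a cheap forward pass.

```python
import json


def train_in_cloud(samples, epochs=2000, lr=0.05):
    """'Cloud' side: fit y = w*x + b by gradient descent (the costly step)."""
    w, b = 0.0, 0.0
    n = len(samples)
    for _ in range(epochs):
        # Gradients of the mean squared error with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in samples) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in samples) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return {"w": w, "b": b}


def export_model(params) -> str:
    """Serialize the trained parameters into a small blob for the device."""
    return json.dumps(params)


def infer_on_edge(model_blob: str, x: float) -> float:
    """'Edge' side: load the parameters and run a forward pass, no training."""
    params = json.loads(model_blob)
    return params["w"] * x + params["b"]


if __name__ == "__main__":
    data = [(x, 3 * x + 1) for x in range(5)]  # synthetic data, y = 3x + 1
    blob = export_model(train_in_cloud(data))
    print("model blob:", blob)
    print("prediction for x=10:", infer_on_edge(blob, 10))
```

The device-side function never touches the training data or the gradient loop, which is the property that lets inference hardware be smaller and lower-power than training hardware.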
