

December 1, 2019 at 7:00 am #37482

#News(IoTStack) [ via IoTGroup ]

Headings…

Battle of Edge AI — Nvidia vs Google vs Intel

PERFORMANCE, SIZE, POWER and COST

SOFTWARE APPLICATIONS

Intel® Neural Compute Stick 2

Learn AI programming at the edge with the newest generation

Auto extracted Text…

Battle of Edge AI — Nvidia vs Google vs Intel, by SoonYau, Jun 14

Edge AI is still new and many people are not sure which hardware platforms to choose for their projects.

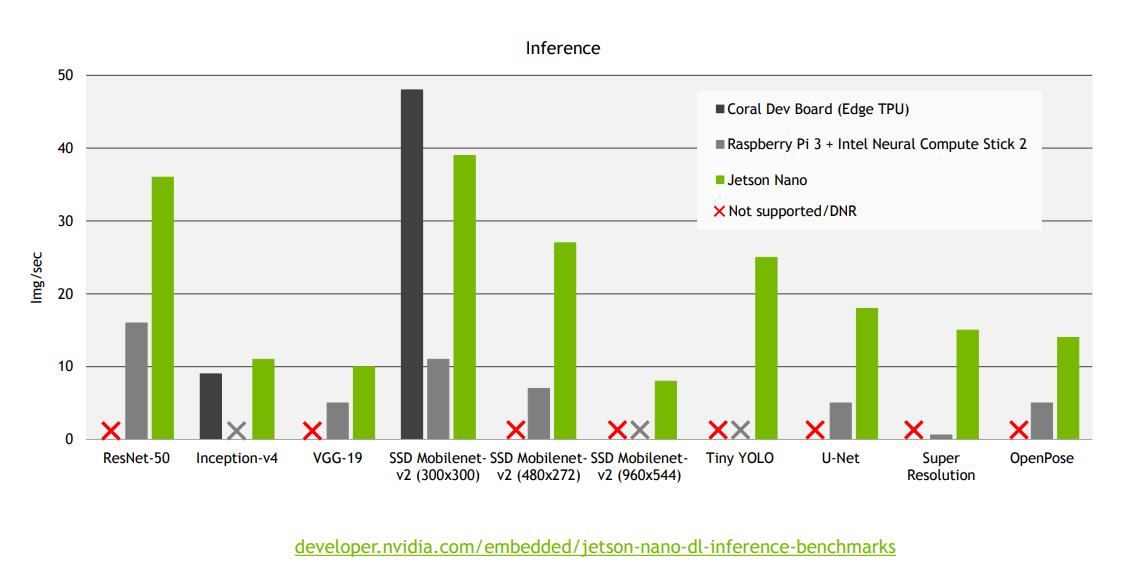

In its benchmark comparisons, Nvidia did a good job of lining up the competition: the Intel Neural Compute Stick, the Google Edge TPU and its very own Jetson Nano.

When evaluating AI models and hardware platforms for real-time deployment, the first thing I look at is how fast they are.

Nvidia ran these benchmarks and published the results at https://developer.nvidia.com/embedded/jetson-nano-dl-inference-benchmarks.

Jetson Nano’s numbers look good for real-time inference, so let’s use them as the baseline.
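A baseline is only meaningful if every board is timed the same way. The sketch below is a minimal, hypothetical harness for measuring average frames per second: `infer` is a placeholder for whatever runs one forward pass on the device (a TensorRT engine, the Edge TPU runtime, OpenVINO on the Neural Compute Stick), and the warm-up count is an arbitrary choice, not Nvidia's methodology.

```python
import time
from typing import Callable, Sequence


def measure_fps(infer: Callable[[object], object],
                frames: Sequence[object],
                warmup: int = 5) -> float:
    """Return the average frames per second of `infer` over `frames`.

    `infer` is a hypothetical placeholder for one forward pass on the
    target accelerator; plug in the runtime of the board being tested.
    """
    if not frames:
        raise ValueError("need at least one frame")
    # Warm-up calls are not timed: the first inferences often include
    # one-time costs such as memory allocation or loading the model.
    for frame in frames[:warmup]:
        infer(frame)
    start = time.perf_counter()
    for frame in frames:
        infer(frame)
    elapsed = time.perf_counter() - start
    return len(frames) / elapsed


if __name__ == "__main__":
    # Stand-in "model" that just sleeps about 5 ms per frame.
    def fake_infer(frame):
        time.sleep(0.005)
        return frame

    print(f"{measure_fps(fake_infer, list(range(50))):.1f} FPS")
```

Timing a fixed set of frames with batch size 1 keeps the number comparable to per-frame latency; batched or pipelined runtimes can report higher throughput than this loop shows.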

The Edge TPU can run classification at 130 FPS, twice the Nano’s rate!
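For real-time work it helps to turn FPS into a per-frame time budget. Assuming frames are processed one at a time (so latency is simply the reciprocal of throughput), and taking the Nano's figure only as what "twice" implies rather than a published number:

```latex
t_{\text{frame}} = \frac{1000\ \text{ms}}{\text{FPS}}, \qquad
t_{\text{Edge TPU}} = \frac{1000}{130} \approx 7.7\ \text{ms}, \qquad
t_{\text{Nano}} \approx \frac{1000}{130/2} = \frac{1000}{65} \approx 15.4\ \text{ms}
```

At a 30 FPS camera rate (about 33 ms per frame), both would leave headroom for pre- and post-processing under this assumption.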

Starting from the middle of the photo, the Coral Edge TPU Dev Board is exactly the size of a credit card, so you can use it as a reference to gauge the size of the others.

Edge TPU Dev Board: $149.99 (dev), 40 x 48 mm

Both the Jetson Nano and the Edge TPU Dev Board use 5 V power supplies; the Nano has a power specification of 10 W.

However, the Edge TPU board’s heatsink is much smaller, and its fan doesn’t run all the time during the object detection demo.

Coupled with the Edge TPU’s efficient hardware architecture, I would guess its power consumption is significantly lower than the Jetson Nano’s.
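The power guess can be framed as an efficiency comparison: inferences per joule is FPS divided by watts. A minimal sketch follows; the inputs are assumptions, not measurements: 10 W is the Nano's power specification rather than its measured draw, and 65 FPS is only what "half of 130 FPS" implies. The helper `break_even_watts` (a hypothetical name) gives the highest draw at which the faster board still matches the baseline's efficiency.

```python
def inferences_per_joule(fps: float, watts: float) -> float:
    """Energy efficiency: (frames / s) / (J / s) = frames per joule."""
    if fps <= 0 or watts <= 0:
        raise ValueError("fps and watts must be positive")
    return fps / watts


def break_even_watts(fps: float, baseline_inferences_per_joule: float) -> float:
    """Highest power draw at which a device running at `fps` still
    matches the baseline's inferences per joule."""
    if baseline_inferences_per_joule <= 0:
        raise ValueError("baseline efficiency must be positive")
    return fps / baseline_inferences_per_joule


if __name__ == "__main__":
    # Assumed inputs: the Nano's 10 W spec and the FPS implied by "twice".
    nano_eff = inferences_per_joule(fps=65.0, watts=10.0)
    print(f"Jetson Nano: {nano_eff:.1f} inferences/J")
    # At 130 FPS the Edge TPU board matches that efficiency up to this draw.
    print(f"Edge TPU break-even: {break_even_watts(130.0, nano_eff):.1f} W")
```

This prints 6.5 inferences/J for the Nano and a 20.0 W break-even for the Edge TPU board: because it is twice as fast, it only needs to draw less than twice the Nano's power to be more efficient, which supports the guess above without proving it. A real comparison needs measured power, for example from a USB power meter.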

Although the Edge TPU appears to be the most competitive in terms of performance and size, it is also the most limited in software support.

This is why so many of the Edge TPU entries in Nvidia’s benchmark are marked DNR (did not run).

As a pioneer in AI hardware, Nvidia has the most versatile software: TensorRT supports most ML frameworks, including MATLAB.

That said, if your application involves non-computer-vision models, e.g. recurrent networks, or you develop your own models with many custom layers, it is safer to use the Jetson series to avoid nasty surprises when porting trained models to embedded deployment.
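One cheap way to avoid those surprises is to check a model's operator list against the target's supported operators before committing to a board. The sketch below assumes you can export the list of op names from your model; `SUPPORTED_OPS` is a deliberately tiny, hypothetical whitelist styled after TensorFlow Lite op names, not the real Edge TPU or TensorRT support list, so consult the vendor's documentation for that.

```python
from typing import AbstractSet, Iterable, List

# Hypothetical operator whitelist for an accelerator. NOT the actual
# Edge TPU or TensorRT support list; replace with the vendor's list.
SUPPORTED_OPS: AbstractSet[str] = frozenset({
    "CONV_2D", "DEPTHWISE_CONV_2D", "FULLY_CONNECTED", "AVERAGE_POOL_2D",
    "MAX_POOL_2D", "ADD", "CONCATENATION", "RESHAPE", "SOFTMAX",
})


def unsupported_ops(model_ops: Iterable[str],
                    supported: AbstractSet[str] = SUPPORTED_OPS) -> List[str]:
    """Return the distinct ops in `model_ops` that the target cannot run,
    in order of first appearance."""
    missing: List[str] = []
    for op in model_ops:
        if op not in supported and op not in missing:
            missing.append(op)
    return missing


if __name__ == "__main__":
    # Made-up layer list: a CNN backbone, a recurrent head and one custom
    # layer, the kind of model the paragraph above warns about.
    model = ["CONV_2D", "MAX_POOL_2D", "CONV_2D", "RESHAPE",
             "LSTM", "MY_CUSTOM_GATE", "FULLY_CONNECTED", "SOFTMAX"]
    print(unsupported_ops(model))
```

Running it prints `['LSTM', 'MY_CUSTOM_GATE']`; with a real whitelist, a non-empty result is the early warning that a model may not run on that board at all.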
